Reduce Magento MySQL usage using compression

Most Magento production stores have a few clones used for development purposes; be it UAT, staging, development branches or simply disposable test sites. But with each and every clone - the storage requirements increase, which for the files, isn't too dramatic, just a few megabytes (excluding media) - but the database can be a whole other animal.

We tend to find that our average customer has at least 3 development copies at any given time of their store, so the storage requirements for MySQL are almost 4x what you would expect to be using

Database bloat

If caches were the giant plaster to be put over performance issues (read: caches don't fix performance problems!), then database compression is definitely the plaster for database swelling.

The typical Magento database has a lot of tables that can simply be "discarded" when cloned, such as,

| Table name | Safe to truncate |

|---|---|

| dataflow_batch_export | |

| dataflow_batch_import | |

| log_customer | |

| log_quote | |

| log_summary | |

| log_summary_type | |

| log_url | |

| log_url_info | |

| log_visitor | |

| log_visitor_info | |

| log_visitor_online | |

| report_event | |

| index_event | |

| report_viewed_product_index | |

| report_compared_product_index |

Truncate on dump/clone

Those marked with a warning triangle should be tested in a development environment to ensure that the removal of data doesn't have a negative impact on the store. In some cases, it can cause 404 errors to be displayed on product/category pages.

So of course, the first step when cloning a store can be to simply exclude these tables. If you dump databases with mage-dbdump.sh then a lot of tables are actually excluded, including those that would be unsafe to do on a production database.

dataflow_batch_export dataflow_batch_import log_customer log_quote log_summary log_summary_type log_url log_url_info log_visitor log_visitor_info log_visitor_online report_event index_event enterprise_logging_event_changes core_cache core_cache_tag core_session core_cache_tagSome tables do need to be retained though

But beyond this, there are index tables like core_url_rewrite that contain data that needs to be preserved, but can be huge in size. These are an ideal candidate for compressing.

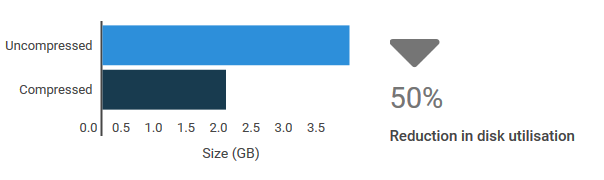

That's a whopping 50% reduction on disk usage. The impact of this, especially when using SSDs is really quite dramatic, because SSD performance degrades the more full the disk is, so reducing disk usage, will naturally improve performance.

Performance with compression

The biggest question is really going to be whether you'll see a negative impact from compressing the database, because all you'll be doing is trading a few extra £'s each month that you should be spending on larger disk drives, for your own time; and time is worth far more than money.

So in a very unscientific way we took the next store demonstration we were set to do and performed some tests on it. It was a fairly typical customer Magento store,

- Database size of 3.8GB

- 20,000 products

- 3,000 categories

- Running on a demonstration stack with 8-Cores, 32GB RAM and 1TB Enterprise HDDs.

After enabling compression, the results spoke for themselves,

Well, it was a given that compression was going to reduce utilisation, but 50% is excellent. Now, the important thing to check is the effect that has on performance.

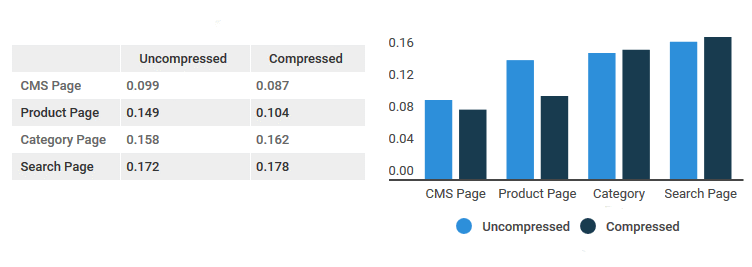

Now its getting interesting, we've got space savings and we've got negligible performance impact. In fact, we think that there could even be performance improvement from compressing the right tables, as the MySQL manual says,

Compression can help to improve both raw performance and scalability. The compression means less data is transferred between disk and memory, and takes up less space on disk and in memory. The benefits are amplified for tables with secondary indexes, because index data is compressed also.

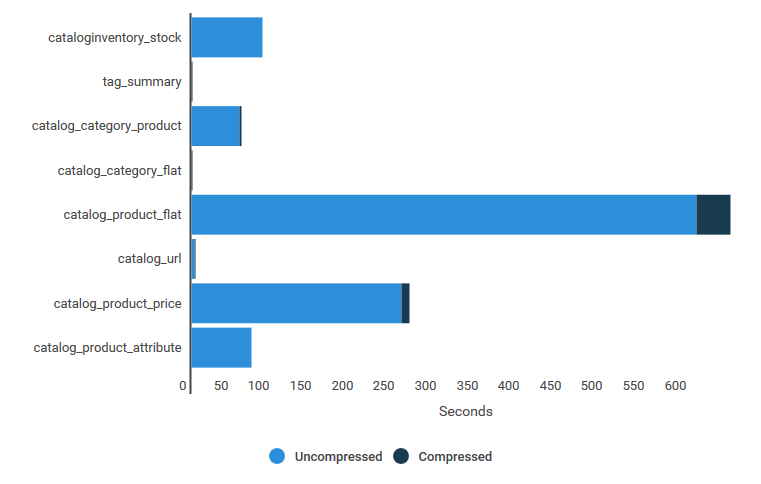

Lets move on to reindexing performance,

The compressed DB does perform up to 10% slower on some tasks, but its more than acceptable for a development/staging site clone. Now, we acknowledge that the test conditions were largely unclean (being a demonstration server) - and whilst the testing is accurate to decide the impact on a dev/staging clone, it isn't accurate enough to paint the picture of the impact on a product store.

But, it begs the question, "What if compression on a production store did improve performance?" And that's something we're going to set out to determine in our next article.

Let the compression begin

Compressing

-

Set the database name in a variable, so we can use it in future commands

DB_NAME="example_magliv" -

Connect to MySQL and collect a list of tables that should be compressed and store the result in a file,

for TABLE in $(mysql -Nsr -h db1.i -u root -p -e "SELECT table_name FROM information_schema.tables WHERE table_schema='$DB_NAME' AND engine = 'InnoDB' AND table_type = 'BASE TABLE';"); do echo -e "alter table $TABLE row_format=compressed;" done > commands.sqlThis requires the MySQL root user credentials

-

Connect to MySQL as a user with alter table privileges and set the table file format to Barracuda (required for compression),

mysql -h db1.i -u root $DB_NAME -p SET GLOBAL innodb_file_format='Barracuda'; -

Select the DB to be operated on and load the

commands.sqlfile we generated earlier,source commands.sql; - That's it. Sit back, relax and enjoy having a signficantly smaller database

De-compressing

-

Generate the decompression

command.sqlfile,for TABLE in $(mysql -Nsr -h db1.i -u root -p -e "SELECT table_name FROM information_schema.tables WHERE table_schema='$DB_NAME' AND engine = 'InnoDB' AND table_type = 'BASE TABLE';"); do echo -e "alter table $TABLE row_format=compact;" done > commands.sql -

Select the DB to be operated on and load the

commands.sqlfile we generated earlier,mysql -h db1.i -u root $DB_NAME -p source commands.sql;

FAQs

Is there any downside to a compressed DB?

CPU usage will increase, disk usage will decrease; this is because the CPU has to decompress/compress the data on access. If your stack has available CPU resources (<25% CPU usage is considered healthy) and is low on disk space, its well worth it.

Will I need to re-compress as time goes on?

The only time it will need compression re-enabling is if you restore an SQL file to the same database - for example if you were running the clone_store.sh script and used the dump and restore option, this would dump the database from the live site (which is uncompressed) and restore it to the specified database uncompressed.